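Some scattered notes I took a long time ago while reading the libuv source, orz. I strongly recommend going straight to the reference links to understand how file I/O and network I/O are implemented; my notes are probably readable only by me.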

1 Design Philosophy

libuv uses a thread pool to make asynchronous file I/O operations possible, but network I/O is always performed in a single thread, each loop’s thread.

Threads are used internally to fake the asynchronous nature of all of the system calls.

2 Event Loop

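The event loop is an infinite loop that runs several phases in order, and each phase checks whether its events have occurred. The most important phase is uv__io_poll, which calls a multiplexing API such as epoll to wait for events. When an event fires, the loop performs the I/O and then runs the callback (e.g. epoll -> read -> read_cb; remember that epoll does not perform the I/O itself, it only reports readiness). A callback may register new events, which keeps the loop going; uv_run keeps iterating as long as there are active handles or requests. The main flow is: perform I/O -> run callback -> perform I/O -> run callback ...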

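Note that network I/O and file I/O work differently: file I/O goes through a thread pool.

For network I/O, the task that performs the I/O is the cb of uv__io_t, and it runs inside uv__io_poll.

For file I/O, the task is the work member of uv__work, and it runs on a thread-pool thread.

In both cases the callbacks are invoked from uv__io_poll.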

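Note: epoll_pwait installs a signal mask for the duration of the wait, so the wait can return because a signal was delivered rather than because an event occurred; in that case it fails with errno set to EINTR.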

2.1 uv__io_poll

The function that actually does the polling. Taking epoll as an example, when an event fires it simply calls the cb of the corresponding io_watcher.

2.2 Network I/O Flow

https://blog.butonly.com/posts/node.js/libuv/6-libuv-stream/

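uv_tcp_init

-> uv__stream_init (initializes the stream)

-> uv__io_init (initializes the stream's uv__io_t and sets its cb to uv__stream_io, the function that does the actual I/O work)

-> uv_listen (uv_tcp_listen; for a listening socket this replaces the watcher's cb with uv__server_io, which accepts connections and calls connection_cb)

-> uv__io_start adds the I/O watcher (uv__io_t) to loop->watchers

-> when an event fires, uv__io_poll calls uv__stream_io, which performs the corresponding read, connect or write and then calls the matching callback such as read_cb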

`// io_watcher's cb: calls the actual I/O task, e.g. uv__read, depending on the stream's current state`

`// add the I/O object to be watched`

2.3 File I/O Flow

2.3.1 Thread Pool

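The pool starts 4 threads by default (configurable through the UV_THREADPOOL_SIZE environment variable), all running the worker function. A worker sleeps on a condition variable until a task is submitted; once woken, it takes a task from the work queue and runs it. User code submits tasks to the work queue through the API, which also wakes a sleeping thread.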

2.3.2 Submitting Tasks

https://github.com/libuv/help/issues/62

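uv_fs_t is the file-system request type. APIs such as uv_fs_read end up calling uv__work_submit so that the request is handled by the thread pool (when a callback is given; with a NULL callback the request runs synchronously). A uv__work represents a task for the thread pool, and uv__work->work is the function to execute. The flow in detail: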

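uv_fs_open (initializes the uv_fs_t with the INIT and PATH macros, then submits the task with POST)

-> uv__work_submit (initializes the uv__work, sets its work (uv__fs_work) and done (uv__fs_done) members, and submits the task)

-> uv_cond_signal (wakes a worker thread)

-> w->work(w) performs the actual I/O (uv__fs_work, which maps the fs_type of the uv_fs_t to the actual operating-system API)

-> uv_async_send -> uv__async_send (writes to async_wfd; uv__io_poll watches all file descriptors, this one included, with epoll_wait, so it learns that the file operation has finished)

-> uv__io_poll runs the watcher's callback w->cb (here uv__async_io, set up in advance just as uv__stream_io is for streams)

-> (uv__work_done -> uv__fs_done)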

`static void uv__fs_work(struct uv__work* w)`

3 Data Structures

`struct uv_loop_s`

The two kinds of I/O requests

`struct uv__io_s`

`struct uv__work`

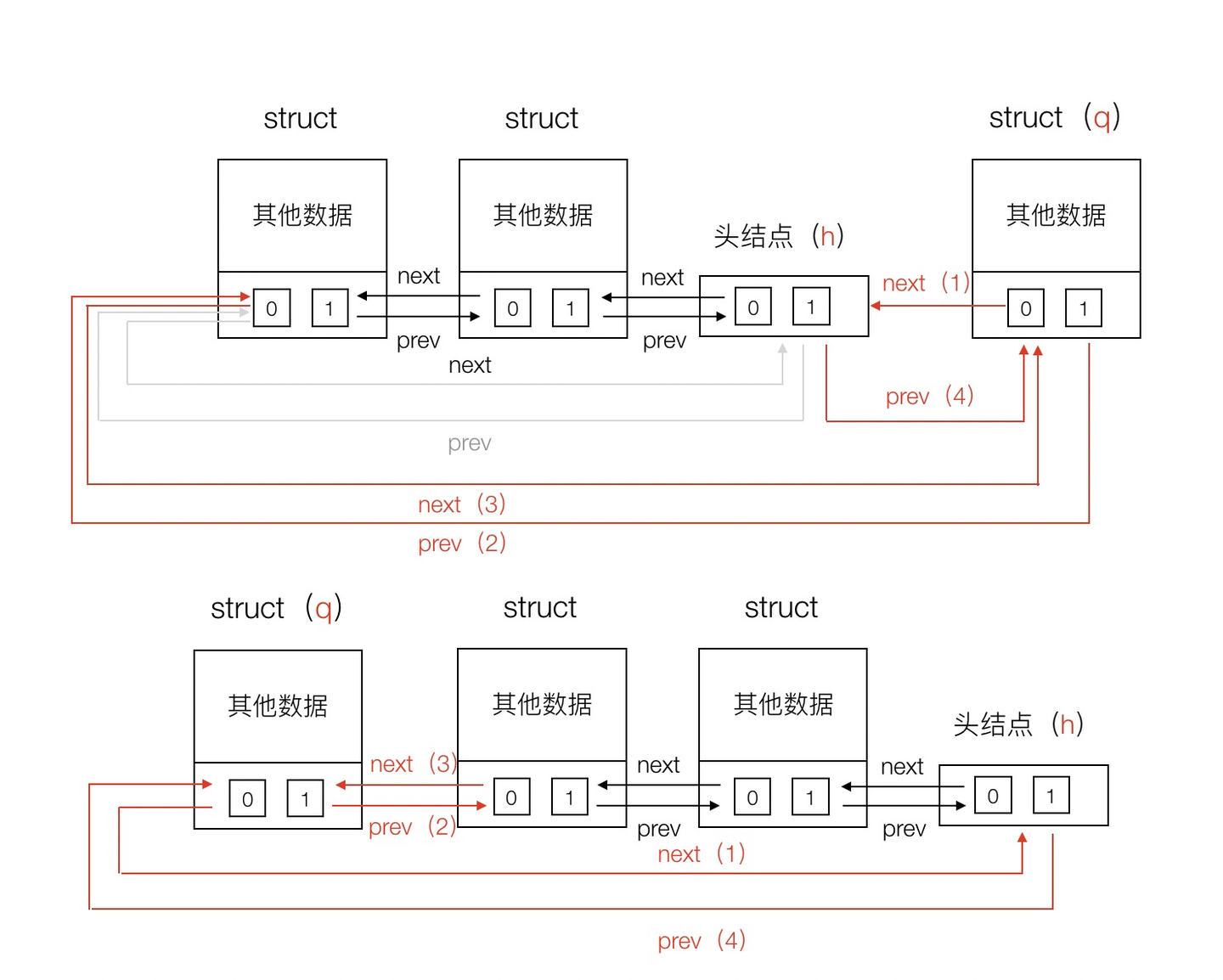

`typedef void* QUEUE[2];`

References

https://zhuanlan.zhihu.com/p/83765851

https://blog.butonly.com/posts/node.js/libuv/6-libuv-stream/

https://github.com/libuv/help/issues/62

https://blog.butonly.com/posts/node.js/libuv/7-libuv-async/